Well, in

this blog I am going to be talking about something that we always had to do at

one point or the other…I used to get frequent requests whenever I was testing

data files loaded into Essbase or Planning, is there a way to test the data

loaded in the system. Many a times data may get kicked out due to some issue

which was not checked ( happens in production environment where you do not

maintain the system and are called for a review) or you may have tried to load

data into a dynamic calc member… Now in a normal file I am talking about

thousands of rows for a given month and you have to validate it…You could do it

manually, but then you would probably be reading this blog on scrolls of

papyrus… So let us see if we can fashion a solution to help in the same.

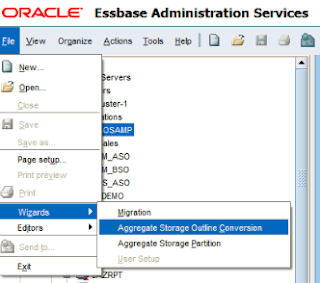

BSOSAMP is

the application I am going to be working on…

This is the

file that I need to load in my application… It is a free form load but can very

well be a file which was loaded using rule files(There is one small thing to

note, if you are doing manipulations in rules files like splitting fields or

adding a prefix and stuff, you may need some more work to get a solution to

work)

So I load

the file into the application and it loads successfully as shown in the below

snapshots.

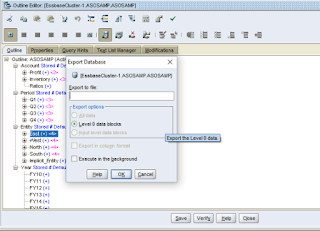

So now I

have loaded the file and data rests in Essbase…Now although the system shows

that data has loaded successfully, I may need to verify the data is present at

level-0 (Generally, you will have to verify that the data rolls up correctly,

but once you understand the example, it is a simple matter of manipulating the

members that are there in the report script that I will show later…On a side

note, there is a way to do rollup verification as well…I will show it later…It

requires the outline XML and some coding in PLSQL…But it is beautiful!!!).

So now I

will show one of the features that I personally feel is not used a lot, Essbase

report scripts. Below is the report script that I have used to extract data

from Essbase.

If you check

the row specification you will find that the columns are in the following

order:-

Account, Entity, Year, Period

This is

similar to the order in which the data was in the source file. Assuming you have

a file that is in a different format, all that you have to do is map the row

specification to the format of your file and you are golden.

On running

the above repot script, you will get a file as shown below (I have used the

console here to show for this demo, you can redirect it to a file as well using

MAXL)

This data is

in the same format as the file that you loaded…Now you can either use VLOOKUP

or you can load the data in Oracle and run a minus query to check mismatch

between Essbase and the data loaded…To each, his own hammer and nails but the

data can be validated much more easily now

[On a side

note, I once worked on a system where we were getting variances during the year

end for HQ values. The business managers were not sure why the data was not

tallying and more so at HQ level. Lot of manipulations led us to a level 0 data

combination that for some reason had doubled the values…That was the crisis

that gave birth to this idea and it has helped me ever since…Using report

scripts for focused data validation]