In this post, I read through the log file of an FDMEE multi-period data load rule. I chose this log file because it makes for an interesting read: it shows exactly how FDMEE works internally, and how it imports data, maps it, and finally exports it to a target application.

The import step is shown in the snapshot below. Both of these snapshots are taken from a previous blog post of mine.

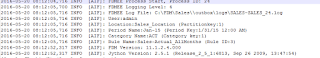

The first section of the log file is shown in the snapshot below. It lists the process ID, the user who initiated the run, the location, period, and category, the versions of Jython and FDMEE in use, and the file that will be loaded.

The next section of the log file gives the characteristics of the file that will be loaded, along with the processing codes.

I would like to talk about some of the processing codes.

NN generally appears when you try to load alphabetic characters into the amount field.

ZP is interesting in that it stops zero values from going through to the tables used for mapping. If a row has zero in the amount column, it will not go through when ZP is enabled (which is the default behavior). This is similar to Essbase ASO, which does not store zero values. To make sure zero values do go through for mapping, we use the NZP function on the amount field.
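
To make the two codes concrete, here is a minimal Python sketch of how an amount might be classified during import. It is not FDMEE's actual implementation: the function name `classify_amount` and the `zero_suppress` flag are hypothetical, and only the codes NN and ZP come from the log.

```python
def classify_amount(raw, zero_suppress=True):
    """Return (code, value); code is 'NN', 'ZP', or None when the row is accepted.

    Hypothetical helper for illustration only, not FDMEE's real code.
    """
    try:
        value = float(raw.strip().replace(",", ""))
    except ValueError:
        # Non-numeric text in the amount field: the row is rejected as NN.
        return "NN", None
    if zero_suppress and value == 0:
        # Zero amount while zero suppression is on: the row is skipped as ZP.
        return "ZP", None
    return None, value


amounts = ["100", "abc", "0", "2,500.75"]
default_run = [classify_amount(a) for a in amounts]
nzp_run = [classify_amount(a, zero_suppress=False) for a in amounts]
```

In `default_run` the `"0"` row is dropped with code ZP, while in `nzp_run` (zero suppression turned off, which is the effect NZP has on the amount field) the same row is kept with a value of 0.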

Another interesting thing in the above snapshot is the Rows loaded line, which shows a value of 60. This is calculated as (number of rows * number of periods); in our case, 5 * 12 gives 60. This is the ELT part of FDMEE at work, since we have now conformed the data into a relational model.
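
The arithmetic can be sketched in Python as an unpivot: each source line carries one amount per period and becomes one staged row per period. The account names, period labels, and amounts below are made up for illustration; only the 5 lines and 12 periods come from the log.

```python
from collections import Counter

periods = [f"P{n:02d}" for n in range(1, 13)]        # 12 hypothetical period labels
accounts = ["A100", "A200", "A300", "A400", "A500"]  # 5 hypothetical source lines

# Each source line: an account followed by one non-zero amount per period.
source_lines = [
    (acct, [10.0 * (i + 1)] * len(periods)) for i, acct in enumerate(accounts)
]

# Unpivot: one relational row per (account, period) pair.
staged_rows = [
    {"account": acct, "period": period, "amount": amount}
    for acct, amounts in source_lines
    for period, amount in zip(periods, amounts)
]

rows_loaded = len(staged_rows)  # 5 lines * 12 periods
rows_per_period = Counter(row["period"] for row in staged_rows)
```

Here `rows_loaded` is the product of lines and periods, and `rows_per_period` gives the kind of per-period breakdown that the log reports after the total.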

A detailed summary of the number of rows imported for each time period is shown in the snapshot below.

The next section of the log file shows the mappings in place. Since we have a very simple mapping that moves data as is, there is only one entry. With multiple mappings, we would get a detailed tally of the number of rows updated by each mapping.
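
As a rough illustration of such a tally, the Python sketch below applies a list of mapping rules in order and counts how many rows each one updates. The rule names, the rule format, and the sample rows are hypothetical and do not reflect FDMEE's internal mapping tables.

```python
from collections import Counter
from fnmatch import fnmatchcase

# Hypothetical mapping rules: (rule name, source pattern, target value).
# A target of None means "pass the source value through as is".
rules = [
    ("Explicit_1000", "1000", "Cash"),
    ("Like_All", "*", None),
]

rows = [{"account": a, "target": None} for a in ["1000", "2000", "3000", "1000"]]

tally = Counter()
for name, pattern, target in rules:
    for row in rows:
        # Only rows not yet mapped by an earlier rule are candidates.
        if row["target"] is None and fnmatchcase(row["account"], pattern):
            row["target"] = target if target is not None else row["account"]
            tally[name] += 1
```

With these sample rows, the explicit rule updates the two `1000` rows and the catch-all rule updates the remaining two, so the tally has one entry per rule.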

The ETL staging of the data for the push to the target application happens in a set of relational tables, as shown in the snapshot below. I would like to cover this in more detail later.

The validation for data export is shown in the snapshot below. It lists the number of valid rows available for data export for each time period.

The next snippet shows the data being loaded from FDMEE into the application. Since the target is Essbase, FDMEE uses a rule file to push the data through. This rule file is created using the Essbase JAPI, and the push happens through the JAPI as well…
